Advanced Python Synthesizer

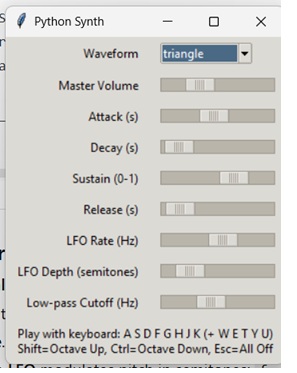

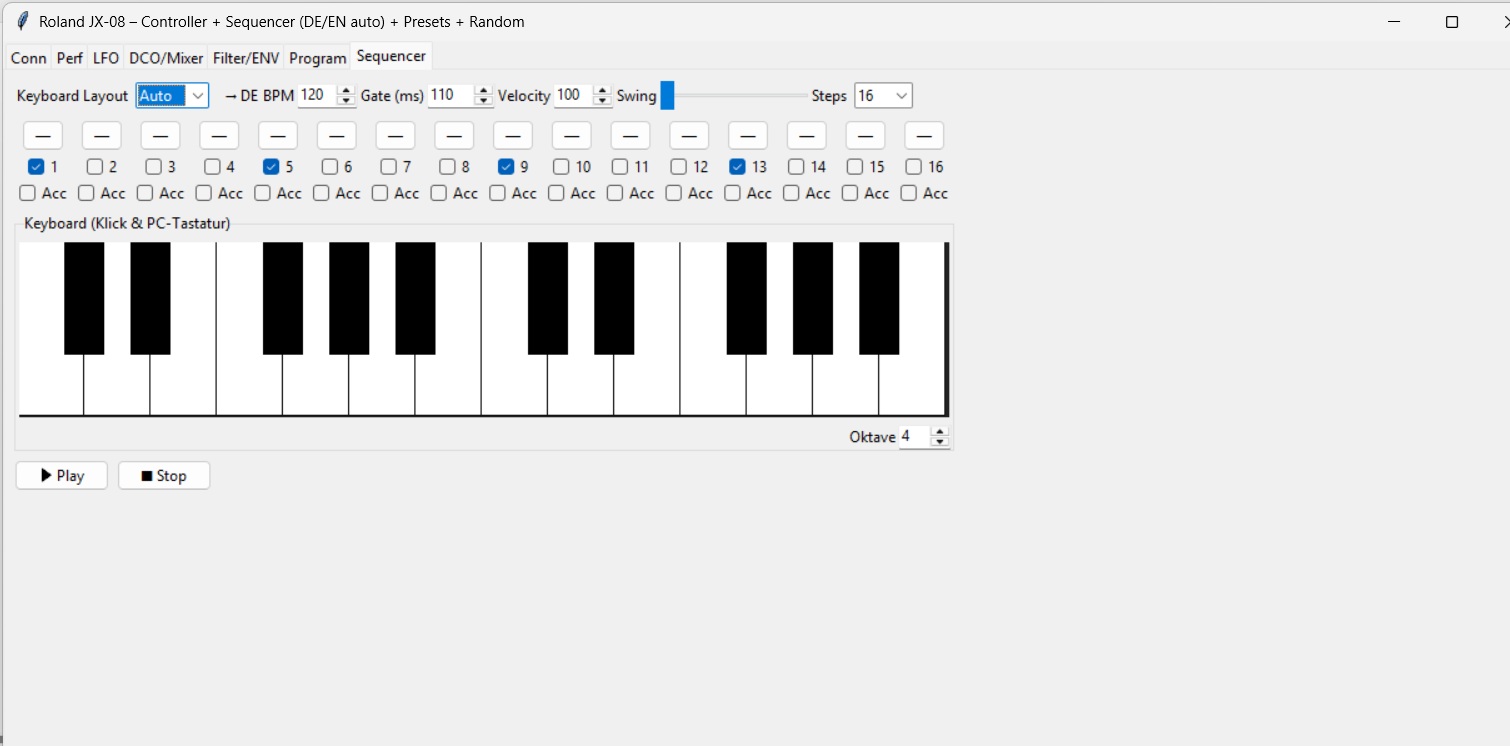

- Virtual piano (clickable) drawn on a Tkinter Canvas

- Wave recorder (save to WAV) that records the synth’s mixed output

- Presets: Soft Pad, Chiptune Lead, Bass

- The earlier features: waveforms, polyphony, ADSR, LFO vibrato, low-pass filter, master volume

- VST support (practical path): MIDI-out to an external VST host (DAW or Carla) using mido + python-rtmidi (details below)

Requirements

pip install sounddevice numpy soundfile mido python-rtmidi

On Linux you may also need the PortAudio development package:

sudo apt-get install portaudio19-dev

Windows may need the Microsoft Visual C++ Redistributable.

How the new bits work

- Virtual piano: draws white and black keys on a `Canvas`, maps each rectangle to a MIDI note, and handles mouse down/drag/up to trigger `note_on`/`note_off` (with a visual pressed state).

- Recorder: the synth’s audio callback calls `capture_cb(buf)`; a background `Recorder` writes those float32 blocks to a 16-bit WAV file using soundfile.

- Presets: a dict of parameter sets (waveform, ADSR, LFO, cutoff, master_gain). Selecting a preset updates the synth live.

- MIDI-out to VST: enable the checkbox and pick a MIDI output port (e.g., “loopMIDI Port” on Windows, “IAC Driver” on macOS, or a Carla/DAW virtual input). The app sends `note_on`/`note_off` to your VSTi while muting the internal audio notes, so you hear the plugin instead.
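To make the virtual piano idea concrete, here is a minimal sketch of the geometry and mouse logic, kept free of Tkinter so it can be run on its own. The names `Key`, `build_keys`, `note_at` and `MouseTracker`, and the key sizes, are illustrative assumptions and not the app's actual code.

```python
from dataclasses import dataclass

# Semitone offsets of the 7 white keys within an octave (C D E F G A B).
WHITE_OFFSETS = [0, 2, 4, 5, 7, 9, 11]
# Black keys: index of the white key on their left -> semitone offset.
BLACK_AFTER_WHITE = {0: 1, 1: 3, 3: 6, 4: 8, 5: 10}


@dataclass
class Key:
    note: int    # MIDI note number
    x0: float
    y0: float
    x1: float
    y1: float
    black: bool


def build_keys(first_note=60, octaves=2, white_w=40, white_h=160):
    """Return key rectangles; first_note is assumed to be a C."""
    black_w, black_h = white_w * 0.6, white_h * 0.6
    whites, blacks = [], []
    for octave in range(octaves):
        base = first_note + 12 * octave
        for i, offset in enumerate(WHITE_OFFSETS):
            x = (octave * 7 + i) * white_w
            whites.append(Key(base + offset, x, 0, x + white_w, white_h, False))
            if i in BLACK_AFTER_WHITE:
                bx = x + white_w - black_w / 2   # straddles the boundary
                blacks.append(Key(base + BLACK_AFTER_WHITE[i],
                                  bx, 0, bx + black_w, black_h, True))
    return blacks + whites   # blacks first so hit-testing prefers them


def note_at(keys, x, y):
    """Return the MIDI note under (x, y), or None if no key is hit."""
    for k in keys:
        if k.x0 <= x < k.x1 and k.y0 <= y < k.y1:
            return k.note
    return None


class MouseTracker:
    """Turns mouse down/drag/up positions into note_on/note_off calls."""

    def __init__(self, keys, note_on, note_off):
        self.keys, self.note_on, self.note_off = keys, note_on, note_off
        self.current = None

    def update(self, x, y, pressed=True):
        note = note_at(self.keys, x, y) if pressed else None
        if note != self.current:             # only act when the key changes
            if self.current is not None:
                self.note_off(self.current)
            if note is not None:
                self.note_on(note)
            self.current = note
```

In a Tkinter app, each `Key` would be drawn with `canvas.create_rectangle(k.x0, k.y0, k.x1, k.y1, ...)` (white keys first, so the black ones end up on top), and `update` would be called from handlers bound to `<Button-1>`, `<B1-Motion>` and `<ButtonRelease-1>`, passing `pressed=False` on release.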
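The recorder pattern described above (the audio callback only enqueues blocks, a background thread does the file I/O) can be sketched as follows. One deliberate swap: this sketch uses the standard-library `wave` module and plain Python lists instead of soundfile and NumPy, so that it runs without extra dependencies. The class layout and the mono format are assumptions, not the app's actual `Recorder`.

```python
import queue
import struct
import threading
import wave


class Recorder:
    """Background WAV writer fed by an audio callback (mono, 16-bit)."""

    def __init__(self, path, samplerate=44100):
        self._q = queue.Queue()
        self._wav = wave.open(path, "wb")
        self._wav.setnchannels(1)
        self._wav.setsampwidth(2)            # 2 bytes per sample = 16-bit PCM
        self._wav.setframerate(samplerate)
        self._thread = threading.Thread(target=self._run, daemon=True)
        self._thread.start()

    def capture_cb(self, buf):
        """Called from the audio callback: enqueue only, never touch the disk."""
        self._q.put(list(buf))               # copy; the caller may reuse its buffer

    def _run(self):
        while True:
            block = self._q.get()
            if block is None:                # sentinel pushed by stop()
                break
            # Clip to [-1, 1], then scale float samples to signed 16-bit.
            ints = [int(max(-1.0, min(1.0, s)) * 32767) for s in block]
            self._wav.writeframes(struct.pack(f"<{len(ints)}h", *ints))

    def stop(self):
        """Flush everything queued so far, then close the file."""
        self._q.put(None)
        self._thread.join()
        self._wav.close()
```

Keeping disk writes out of the audio callback matters because a slow write there would stall playback and cause dropouts. With NumPy buffers, `buf.copy()` would take the place of `list(buf)`.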

About “VST support” in Python (important reality check)

- Hosting VST/VST3 plugins directly inside Python isn’t practical in pure Python today: it requires the Steinberg VST3 SDK and a C++ host (built with a framework such as JUCE), plus Python bindings.

- The most reliable Python route is what we implemented: send MIDI to a VST host (DAW, Carla, Cantabile, MainStage, etc.). That gives you the same musical result with a clean, Pythonic control surface.

- If you truly need an embedded plugin host, consider:

  - Writing a minimal JUCE app (C++) and exposing a socket/OSC/HTTP control API to Python, or

  - Using Carla as an external plugin host (it has OSC; Python can control parameters/presets via python-osc), or

  - Building pybind11 bindings around a lightweight C++ VST host (advanced).
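For the MIDI-out route recommended above, here is a minimal sketch of how notes could be routed either to the internal synth or to an external port. `NoteRouter` and `open_mido_sender` are hypothetical names introduced for illustration. The mido calls used (`mido.open_output`, `mido.Message`, `port.send`) are real, but how the app wires them together is an assumption.

```python
class NoteRouter:
    """Send notes either to the internal synth or to an external MIDI port."""

    def __init__(self, synth_on, synth_off, midi_send=None):
        self.synth_on = synth_on          # callable(note, velocity)
        self.synth_off = synth_off        # callable(note)
        self.midi_send = midi_send        # callable(kind, note, velocity) or None
        self.midi_enabled = False         # mirrors the "MIDI-out" checkbox

    def note_on(self, note, velocity=100):
        if self.midi_enabled and self.midi_send:
            self.midi_send("note_on", note, velocity)   # internal voice stays silent
        else:
            self.synth_on(note, velocity)

    def note_off(self, note):
        if self.midi_enabled and self.midi_send:
            self.midi_send("note_off", note, 0)
        else:
            self.synth_off(note)


def open_mido_sender(port_name):
    """Return a midi_send callable backed by a mido output port."""
    import mido  # imported lazily so the router has no hard dependency on it

    port = mido.open_output(port_name)    # pick a name from mido.get_output_names()

    def send(kind, note, velocity):
        port.send(mido.Message(kind, note=note, velocity=velocity))

    return send
```

A typical setup would pass `open_mido_sender("loopMIDI Port")` (or whichever port name your system lists) as `midi_send`. One thing this sketch does not handle is toggling `midi_enabled` while a key is held, which would send the `note_off` to the wrong destination and leave a note hanging.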
